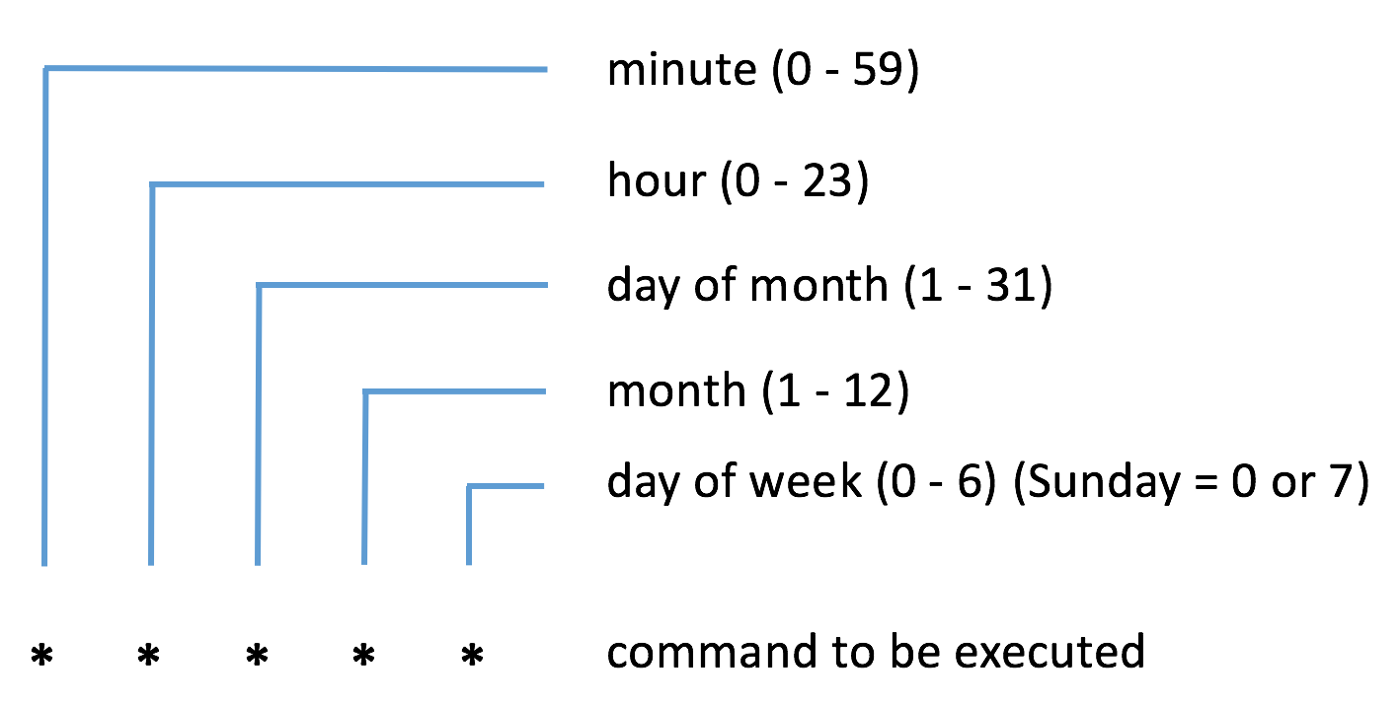

Task schedule configuration (Cron-like)

I've rethought the scheduled-run flow and decided to focus on a cron-like style. Users can still select from simple options such as 10 minutes, 1 hour, or 1 day, but these are stored in cron format. I found a great Python library that simplifies this work: croniter ( https://github.com/kiorky/croniter ). It lets us validate a cron expression and calculate the next run time. In the scheduler, we check the next run time and start processing the task if that time is less than or equal to the current time and the task status is "waiting".

./backend/controllers/task.py

```python
...

@task_bp.route('/start', methods=['POST'])
@login_required
def start_tasks():
    task_payload = request.get_json()
    # Only load a task that belongs to the logged-in user; otherwise return 404.
    task = Task.query.filter(
        Task.id == task_payload['id'],
        Task.user_id == current_user.id,
    ).first_or_404()

    # Reject tasks that have no config or an invalid cron expression.
    if not task.config or not croniter.is_valid(task.config['trigger_value']):
        abort(400, 'Invalid Trigger Setup')

    task.status = 'waiting'
    base = datetime.now...
```